Mixture of Tokens

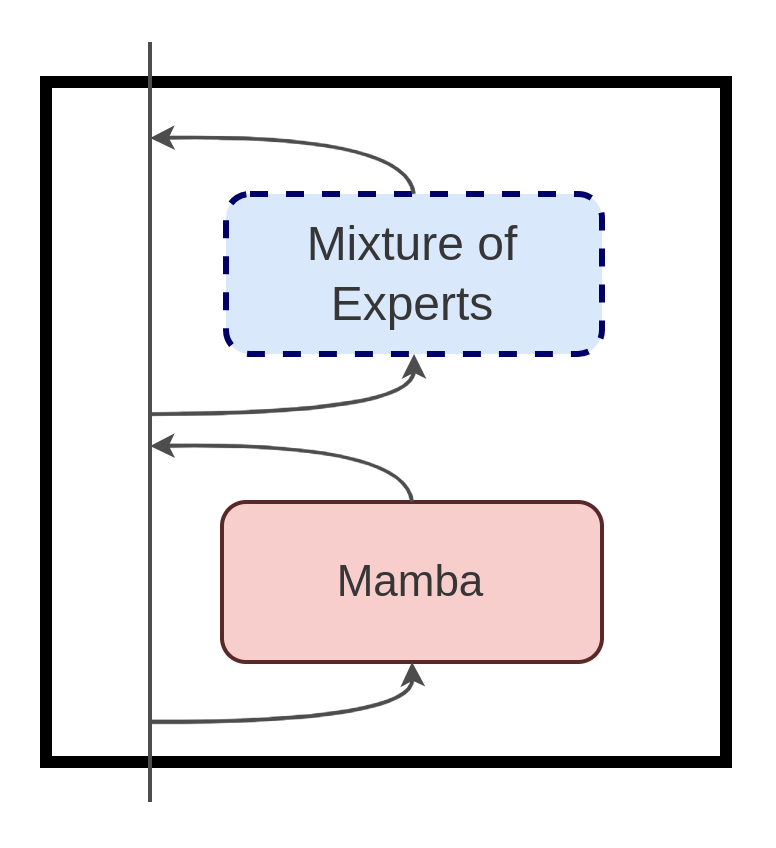

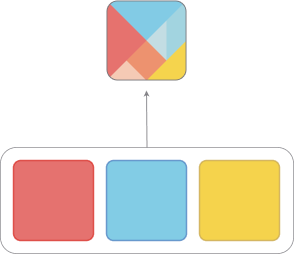

We introduce Mixture of Tokens, a new, fully differentiable Transformer architecture that builds on Mixture of Experts while avoiding its problems. It matches the performance of a vanilla Transformer with a \(3\times\) wall-clock speedup and a \(4\times\) reduction in FLOPs.
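As a rough illustration only, here is a minimal pure-Python sketch of one token-mixing layer. It assumes a formulation in which each expert receives a softmax-weighted mixture of a group of tokens, instead of a discrete top-k selection of individual tokens as in Mixture of Experts. It also assumes the expert's output is sent back to those tokens with the same weights. The names `mixture_of_tokens`, `controller`, and `experts`, the way the group is formed, and the two-layer ReLU experts are hypothetical choices for this sketch, not the authors' implementation.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def relu(v):
    return [max(0.0, a) for a in v]


def random_matrix(rows, cols, scale=0.5):
    return [[random.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def mixture_of_tokens(group, controller, experts):
    """Hypothetical Mixture-of-Tokens layer applied to one group of tokens.

    group:      G token vectors of length d (assumed to come from different
                examples in the batch).
    controller: one hypothetical scoring vector of length d per expert.
    experts:    one (w_in, w_out) pair per expert, forming a ReLU MLP d -> h -> d.
    """
    d = len(group[0])
    outputs = [[0.0] * d for _ in group]
    for score_vec, (w_in, w_out) in zip(controller, experts):
        # Importance of each token for this expert, normalized over the group
        # with a softmax: no top-k selection, so every weight gets a gradient.
        logits = [sum(s * x for s, x in zip(score_vec, token)) for token in group]
        weights = softmax(logits)
        # Blend the whole group into a single input vector for this expert.
        mixed = [sum(w * token[j] for w, token in zip(weights, group)) for j in range(d)]
        expert_out = matvec(w_out, relu(matvec(w_in, mixed)))
        # Send the expert's output back to every token, scaled by its weight.
        for out_vec, w in zip(outputs, weights):
            for j in range(d):
                out_vec[j] += w * expert_out[j]
    return outputs


if __name__ == "__main__":
    random.seed(0)
    d, hidden, group_size, n_experts = 4, 8, 3, 2
    group = random_matrix(group_size, d)
    controller = random_matrix(n_experts, d)
    experts = [(random_matrix(hidden, d), random_matrix(d, hidden)) for _ in range(n_experts)]
    out = mixture_of_tokens(group, controller, experts)
    print(len(out), len(out[0]))  # 3 4
```

In this sketch, the mixing weights are continuous, so gradients reach the controller through every token rather than only through the tokens a router happened to select. Each expert also runs once per group instead of once per token.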
